LoRA Explained and a Bit About Precision and Quantization

LoRA Explained and a Bit About Precision and Quantization (YouTube)

Papers and resources: LoRA paper: arxiv.org/abs/2106.09685; QLoRA paper: arxiv.org/abs/2305.14314; Hugging Face 8-bit intro: huggingface.

A comprehensive step-by-step breakdown of bitsandbytes 4-bit quantization with the NF4 (normal float, 4-bit precision) data type. This post is intended as a one-stop guide covering everything from quantizing large language models to fine-tuning them with LoRA, along with a detailed look at the inference phase and decoding strategies.

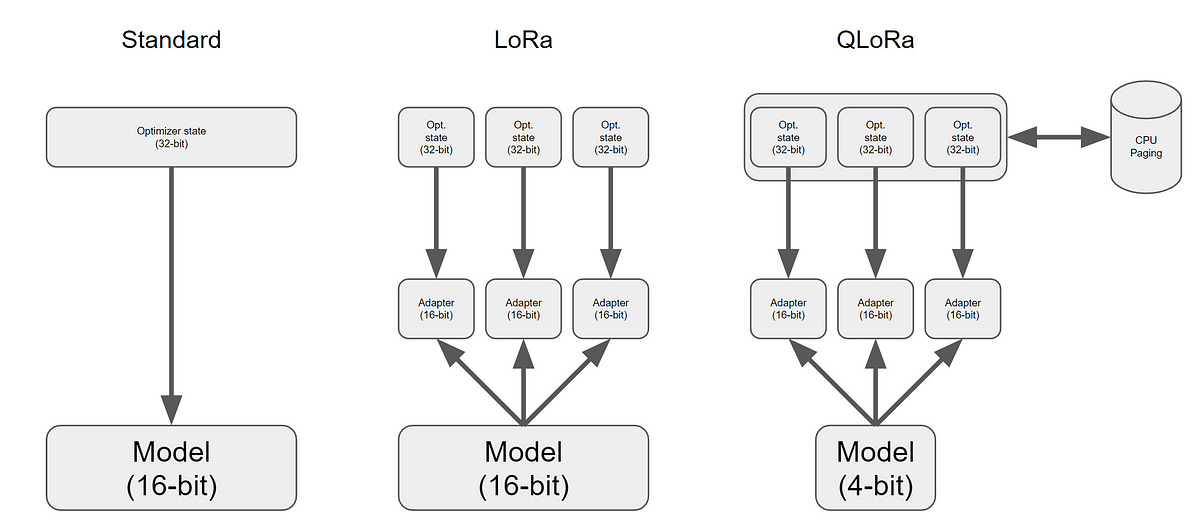

Meet LoftQ: LoRA-Fine-Tuning-Aware Quantization for Large Language

Conclusion. Quantization, along with techniques such as LoRA and QLoRA, is changing how AI models are optimized for deployment. These techniques produce compact, efficient models that can run on a wide range of devices, from powerful servers to small edge devices, without significantly compromising performance.

One of the most significant LLM fine-tuning techniques that caught my attention is LoRA, or low-rank adaptation of LLMs. In QLoRA, the Q stands for quantization, i.e. the process of reducing numerical precision.

FP16, or half-precision, floats have a range of ±65,504. For 4-bit quantization, the FP16 values are normalized to a range of 0 to 1, and this range is then divided into 16 equidistant buckets. So, during inference, instead of storing the FP16 value itself, memory holds the 4-bit address of the bucket that the value falls into.

Next, we perform LoRA training in 32-bit precision (FP32). At first glance, it may seem counterintuitive to quantize the model to 4 bits and then perform LoRA training in 32 bits. However, this step is necessary: to train LoRA adapters in FP32, the model weights must be returned to FP32 as well. This process involves reversing the ...
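The bucket scheme described above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming plain min-max normalization over the whole tensor; the function names `quantize_4bit` and `dequantize_4bit` are hypothetical and are not part of bitsandbytes or any other library.

```python
import numpy as np

def quantize_4bit(weights: np.ndarray):
    """Map float weights to bucket indices 0..15 (hypothetical helper).

    Assumes min-max normalization to [0, 1] over the whole tensor,
    followed by rounding to 16 equally spaced levels.
    """
    w = weights.astype(np.float32)
    w_min, w_max = float(w.min()), float(w.max())
    scale = (w_max - w_min) or 1.0      # guard against a constant tensor
    normalized = (w - w_min) / scale    # every value now lies in [0, 1]
    # Each code fits in 4 bits; it is kept in a uint8 here for simplicity.
    codes = np.round(normalized * 15).astype(np.uint8)
    return codes, w_min, scale

def dequantize_4bit(codes: np.ndarray, w_min: float, scale: float) -> np.ndarray:
    """Reverse the mapping: bucket index -> approximate FP32 value."""
    return codes.astype(np.float32) / 15 * scale + w_min

fp16_weights = np.array([-1.0, -0.5, 0.0, 0.25, 1.0], dtype=np.float16)
codes, w_min, scale = quantize_4bit(fp16_weights)
restored = dequantize_4bit(codes, w_min, scale)
print(codes)     # bucket addresses stored instead of the FP16 values
print(restored)  # FP32 approximations used for further computation
```

The round trip is lossy: each restored value can differ from the original by up to half a bucket width. A practical implementation would also pack two 4-bit codes into each byte, and the NF4 data type mentioned earlier uses non-uniformly spaced levels rather than the equidistant ones shown here.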

QA-LoRA: Quantization-Aware Fine-Tuning for Large Language Models

LoRA's adapters: A and B [2]. Note that the low rank is represented by the "r" hyperparameter. A small r leads to fewer parameters to tune. While it will shorten the training time, it also ...

Quantization is an indispensable technique for serving large language models (LLMs) and has recently found its way into LoRA fine-tuning. In this work we focus on the scenario where quantization and LoRA fine-tuning are applied together on a pre-trained model. In such cases it is common to observe a consistent gap in the performance on downstream tasks between full fine-tuning and quantization plus LoRA fine-tuning.
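To make the role of A, B and r concrete, here is a small NumPy sketch of a LoRA-adapted linear layer. The layer sizes, the `alpha` value and the name `lora_forward` are illustrative assumptions; the zero initialization of B and the `alpha / r` scaling follow the convention described in the LoRA paper.

```python
import numpy as np

rng = np.random.default_rng(0)

d_out, d_in = 64, 64   # illustrative layer size (assumption)
r = 4                  # the low rank "r" hyperparameter
alpha = 8              # LoRA scaling hyperparameter (assumed value)

# Frozen pre-trained weight: never updated during LoRA fine-tuning.
W = rng.standard_normal((d_out, d_in)).astype(np.float32)

# Trainable adapters: A starts small and random, B starts at zero,
# so the adapted layer initially behaves exactly like the frozen one.
A = (0.01 * rng.standard_normal((r, d_in))).astype(np.float32)
B = np.zeros((d_out, r), dtype=np.float32)

def lora_forward(x: np.ndarray) -> np.ndarray:
    """Compute x W^T plus the scaled low-rank update x A^T B^T."""
    return x @ W.T + (alpha / r) * (x @ A.T) @ B.T

full_params = W.size            # d_out * d_in
lora_params = A.size + B.size   # r * (d_in + d_out)
print(full_params, lora_params)
```

With these sizes the adapters hold 512 trainable values against 4,096 in the frozen matrix. Halving r halves the adapter size again, which is the parameter reduction the text refers to.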

